“Foundation models let us use AI in unprecedented ways”

Everyone is talking about artificial intelligence. But are we ready for it? According to the findings of the 2024 Bosch Tech Compass survey, 64% of respondents worldwide think that AI is poised to be the most influential technology, up from just 41% last year. But only 49% feel sufficiently well prepared for the dawning AI age. The Bosch Center for Artificial Intelligence (BCAI) is working to close this gap. Europe’s foremost industry research institution, it leads both Germany and Europe in AI-related patents. To learn more about current trends and the possibilities that AI will open up in building management going forward, we talked with Philipp Mundhenk, project director for information and communications technology at Bosch Corporate Research.

Mr. Mundhenk, AI has been a recurring theme in literature and film for decades and has already been used for years in Bosch products. But it looks like this technology is evolving very rapidly right now. Why is it such a hot topic?

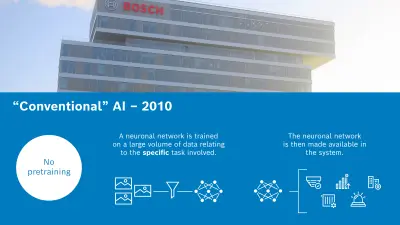

It’s true that we’re currently experiencing a revolution in AI. You could almost draw a clear dividing line between “conventional” AI and “modern” AI – despite the fact that the first of these is only 15 years old. So what has changed? The answer is foundation models, which are now letting us apply AI models much faster and more easily.

We’re witnessing a revolution in AI.

How has AI evolved in recent years?

In the past (in other words, around the year 2010) we had to train AI for specific tasks using special images. A neural network was trained and then deployed in a system. A huge effort was required to capture and process the data for this during development, so it was only worthwhile for larger applications.

Then, about 10 years ago (around 2015), we became able to train models better and more thoroughly for specific tasks. So-called pretraining was added, in which we used large quantities of similar data (like pictures). Afterwards, the AI could be taught to perform a particular task. This made it easier to adapt the model for a given purpose, since less application-specific data had to be captured and processed.

Today we take a different training approach, and foundation models also work differently. We train them much more broadly, by several orders of magnitude in fact, using extremely large datasets. The “conventional” approaches involved, say, a few thousand images, and pretraining was done with tens or hundreds of thousands. In the case of foundation models, however, we’re talking about many billions or even more. In addition, a wide range of modalities (like text, images, videos, and of course building data) lets us train very large networks that cover a wide range of applications and then run in the cloud.

What are the advantages of the foundation model?

Today the biggest benefit is that when we’re building an AI application we can skip the very work-intensive specialized training. We also no longer need the same enormous data volumes as before. In contrast to “conventional” AI models that are taught to perform a specific task, foundation models are trained on a wide spectrum of data and can therefore be deployed for many different tasks without the need to train them for each one separately.

This is the big revolution: today it’s possible to use neural networks that have already been trained elsewhere, which saves us from having to carry out large campaigns to capture all the data first. They only have to be parametrized for the task at hand. The specialized training can also be done with considerably smaller datasets than before. This way we get to a product, and can start implementing applications with it, much faster.

Megatrends in AI

Foundation models

All-purpose AI models are trained on large open-world datasets and then fine-tuned to perform specific tasks. Extremely large networks are built and trained on enormous quantities of data, on a scale that previously could only be mined from the Internet. The new training approach makes it possible to pack more knowledge into models and then use them much more broadly than used to be the case.

Generative AI

An example of foundation models is generative artificial intelligence. In contrast to conventional AI, which was developed primarily for the purposes of analyzing data, recognizing patterns, and predicting events, generative AI is aimed at creating new content on the basis of learned data and patterns. Examples include images, texts, and videos.

Large language models (LLMs)

A topic that overlaps with generative artificial intelligence is large language models (LLMs). These are trained on texts written in natural language. The most prominent examples are GPT-3.5 and GPT-4, which have become well known under the name of ChatGPT. Large quantities of text taken from the Internet have been used to train these models, which generate a string of text by calculating the statistically most likely next word, one word after the other.
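To make the “most likely next word” idea concrete, here is a deliberately tiny sketch in Python. It is not how GPT-style models are built (those use large neural networks, not word-pair counts); it only illustrates the principle of repeatedly picking the statistically most likely continuation. The corpus and the function names `train_bigram_counts` and `generate` are invented for this illustration.

```python
from collections import Counter, defaultdict


def train_bigram_counts(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def generate(counts, start, max_words=5):
    """Greedily append the most frequent follower, one word at a time."""
    output = [start]
    for _ in range(max_words):
        followers = counts.get(output[-1])
        if not followers:
            break  # the last word was never seen with a follower
        next_word = followers.most_common(1)[0][0]
        output.append(next_word)
    return " ".join(output)


# Toy corpus, made up for this example only.
corpus = (
    "the building reports a fault . "
    "the building saves energy . "
    "the building reports a fault"
)
counts = train_bigram_counts(corpus)
print(generate(counts, "the", max_words=4))
```

In this toy corpus, “building” is followed by “reports” more often than by “saves”, so the generated text continues with “reports”. A real LLM makes the same kind of choice, but based on the entire preceding text rather than just the last word.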

Many people are worried about the use of AI. The issue is one of trust. What contribution can Bosch make here?

Our mission is to equip Bosch products and processes across categories with secure, robust, explainable AI. We’re accomplishing this with a combination of innovative solutions, comprehensive collaboration by various teams, and above all responsible actions. In order to extend our understanding of the latest technologies and take advantage of insights for our company and our products, we have begun collaborating with several major universities. In our Bosch Center for Artificial Intelligence, we are also combining skills and knowledge drawn from informatics, applications, and AI to build strong teams and achieve the best possible results.

Despite this focus on innovation, our foremost wish is to keep our customers’ trust by offering them real value instead of liabilities or uncertainties.

We want our AI to support people, not replace them. Bosch adheres to a strong system of values and acts both ethically and prudently. Before we introduce a new product to the market, we make absolutely sure that it works correctly in line with its specifications and is also aligned with our values. There are good reasons why our guiding principle is “invented for life”. In order to meet our commitment to top-notch quality, we have defined guidelines and criteria that all of our products must comply with.

73% of the respondents to a survey we carried out think that generative AI will have an impact as great as the Internet’s.

What exactly does this trend mean for building management?

In the context of buildings, the availability of foundation models is redefining scalability. Most buildings are designed to serve very specific purposes and, unlike cars for example, multiple identical copies of them aren’t made. It therefore isn’t feasible to train a unique AI application for each and every one. Better methods have to be found for ensuring scalability across buildings. Foundation models can be of enormous assistance for achieving this scalability, since they are trained more broadly.

We will have pretrained models at our disposal that then only have to be adapted to the particular tasks in each case. They will be based on large language models, for example. There will be building adapters that are trained once on large quantities of building data and then made available for use. It will only be necessary to parametrize them, which can be done any number of times for different buildings.
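The idea of “train once, parametrize per building” can be pictured with a small Python sketch. Everything in it is hypothetical: the class names, the fields, and the coefficient are placeholders chosen for illustration, not part of any Bosch product or real building model. The point is only that one shared component is reused unchanged, while each building supplies its own parameters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BuildingParameters:
    """Per-building settings (hypothetical fields, for illustration only)."""
    name: str
    floor_area_m2: float
    occupied_hours_per_day: float


class SharedBuildingAdapter:
    """Stand-in for a model trained once on data from many buildings.

    The coefficient is a made-up placeholder, not a learned value.
    """

    def __init__(self, kwh_per_m2_per_occupied_hour: float):
        self.kwh_per_m2_per_occupied_hour = kwh_per_m2_per_occupied_hour

    def estimate_daily_energy_kwh(self, params: BuildingParameters) -> float:
        # The same shared logic is applied to every building;
        # only the parameters passed in differ.
        return (
            self.kwh_per_m2_per_occupied_hour
            * params.floor_area_m2
            * params.occupied_hours_per_day
        )


# One adapter instance, reused for any number of buildings.
adapter = SharedBuildingAdapter(kwh_per_m2_per_occupied_hour=0.01)
office = BuildingParameters("office", floor_area_m2=2000.0, occupied_hours_per_day=10.0)
school = BuildingParameters("school", floor_area_m2=5000.0, occupied_hours_per_day=8.0)

for building in (office, school):
    print(building.name, adapter.estimate_daily_energy_kwh(building))
```

Adding a further building here means writing one more `BuildingParameters` entry, not training anything new, which is the scaling property described above.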

AI will be used for a multitude of functions across a wide range of building management scenarios. Examples include automated guidance along safe escape routes in the event of fire, voice interactions with a building, or a building that automatically monitors its own energy consumption, preorders fuel, and predicts and reports malfunctions in building systems.

This will be a gigantic step forward in achieving smart and networked buildings. Foundation models will enable autonomous buildings, and thanks to a new scaling approach it will be possible to automate entire fleets of buildings – without having to train them first on gigantic quantities of data.